Algorithms for Large-Scale Data Management

Overview

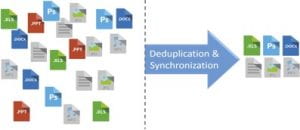

Data management is a critical problem in the age of the cloud. Thousands of petabytes of data are backed up in cloud; each time a user makes a change to a local file, the cloud version must be synchronized to remain current. Such an operation occurs billions of times a day. The bandwidth costs are enormous, justifying the need for a highly efficient synchronization algorithm that carefully transmits only the minimally necessary changes over the network.

Our work proposes a novel synchronization algorithm based on deft use of tools from coding theory and interactive communication. These tools are designed to minimize unnecessary overhead. The synchronization algorithm first discovers small segments of data with changes (between the updated and older versions of a file) and uses a famous error-correcting code to “fix” (i.e., update) the older versions one-by-one.

Recent results

Our algorithm recovers from a large number of edit errors in the order-optimal way in terms of consumed bandwidth. The algorithm was initially designed and analyzed for special cases of theoretical interest (files generated according to uniform probability distributions affected only by deletions.) Recently, we have extended our work to more realistic files affected by insertions and deletions. We have developed an end-to-end implementation of our algorithm and demonstrated its efficacy versus existing tools such as rsync. We have also extended the framework to applications where approximate synchronization is sufficient, thus further reducing required bandwidth. Current research includes network synchronization, data deduplication, reconciliation, and information retrieval.

Our algorithm recovers from a large number of edit errors in the order-optimal way in terms of consumed bandwidth. The algorithm was initially designed and analyzed for special cases of theoretical interest (files generated according to uniform probability distributions affected only by deletions.) Recently, we have extended our work to more realistic files affected by insertions and deletions. We have developed an end-to-end implementation of our algorithm and demonstrated its efficacy versus existing tools such as rsync. We have also extended the framework to applications where approximate synchronization is sufficient, thus further reducing required bandwidth. Current research includes network synchronization, data deduplication, reconciliation, and information retrieval.

Recent publications:

- F. Sala, C. Schoeny, N. Bitouze, and L. Dolecek, “Synchronizing Files from a Large Number of Insertions and Deletions,” IEEE Transactions on Communications, vol. 64 (6), pp. 2258 — 2273, June 2016.

- L. Conde-Canencia, T. Condie, and L. Dolecek, “Data Deduplication with Edit Errors,” IEEE GLOBECOM Conference, Abu Dhabi, UAE, Dec. 2018.

- S. Tabatabaei and L. Dolecek, “A Deterministic, Polynomial-Time Protocol for Synchronizing from Deletions,” IEEE Transactions on Information Theory, 2014.